In the modern world, we take for granted many things that are only possible in the cloud: quick scaling that lets us add or remove resources as needed, high performance, and data security. All of these help make our data flow more efficient. But what do we mean by data flow, and how does it benefit you?

What is data flow?

Data is the lifeblood of any organisation or enterprise. Without it, you cannot complete any tasks that are valuable to your business – product development, sales & marketing, management or other critical processes.

We’ll be using some geodata-related technologies as examples in this blog, since we know them well. Let’s say you need to publish your organisation’s spatial data via an open API. You would use this data yourself in your daily activities, and other stakeholders would greatly benefit from getting their hands on the dataset as well.

Data flow means utilizing data between all of these processes efficiently.

Setting up an efficient data flow

Imagine that you have an open API that publishes spatial data from your internal systems. This data is then consumed by various applications within your organisation (or maybe even by external organisations and enterprises). What can happen in this scenario is that one application sends too many requests and uses up all the available resources in the cluster, leaving little for everyone else.

Let’s say that your service gets national coverage in the news, and people flock to see it for themselves. A normal on-premises server would most likely crumble in a matter of minutes, but a cloud-based solution can react by scaling up. This is a realistic scenario that happened a few years ago, and we actually wrote a blog about it!

Besides great flexibility, the cloud also offers a vast amount of storage space if needed. You might want to consider storing some large datasets in “cold storage”, where you basically pay cents a year to keep the data. Retrieving the data might cost a couple of euros, but it is still far cheaper than alternative storage methods. So consider depositing your large dataset in cold storage and retrieving only what you need, when you need it.

AWS vs. Azure vs. GCP

Cloud providers like Amazon Web Services (AWS), Microsoft Azure and Google Cloud Platform (GCP) provide scalable computing resources on demand. AWS offers storage options like Amazon Simple Storage Service (S3) and Amazon S3 Glacier. Google and Azure have similar storage options, so all three have broadly the same capabilities.

The best choice depends on your needs and budget, of course. If you have a relatively small project with low traffic, you won’t need as sophisticated a solution as a Fortune 500 company, but you can still get results that are just as efficient. Smaller-scale projects might be well suited to the free tiers offered by AWS or GCP.

For long-term data storage, Amazon S3 Glacier might be a good option. You can store data in S3 first and move it to Glacier later, once you access it less frequently and want to save on costs.
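As a rough illustration of the "S3 first, Glacier later" idea, the move can be automated with an S3 lifecycle rule. The sketch below is only an assumption of how this might look with the boto3 library: the bucket name, the `rasters/` prefix and the 90-day threshold are hypothetical values, and `glacier_lifecycle_rule` and `apply_lifecycle` are helper names made up for this example.

```python
from typing import Any


def glacier_lifecycle_rule(prefix: str, days_until_glacier: int) -> dict[str, Any]:
    """Build an S3 lifecycle configuration that transitions objects under
    `prefix` to the GLACIER storage class after the given number of days."""
    if days_until_glacier < 0:
        raise ValueError("days_until_glacier must not be negative")
    # Derive a readable rule ID from the prefix (hypothetical naming scheme).
    rule_id = "archive-" + (prefix.strip("/").replace("/", "-") or "all")
    return {
        "Rules": [
            {
                "ID": rule_id,
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},
                "Transitions": [
                    {"Days": days_until_glacier, "StorageClass": "GLACIER"}
                ],
            }
        ]
    }


def apply_lifecycle(bucket: str, config: dict[str, Any]) -> None:
    """Send the configuration to S3. Requires boto3 and valid AWS credentials."""
    import boto3  # imported here so the rule builder works without boto3 installed

    s3 = boto3.client("s3")
    s3.put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=config
    )


# Hypothetical values: archive everything under "rasters/" after 90 days.
config = glacier_lifecycle_rule("rasters/", 90)
# apply_lifecycle("my-spatial-data-bucket", config)  # uncomment to apply for real
```

Building the rule separately from applying it makes it easy to review the configuration before anything is changed in the bucket.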

Azure and GCP have similar options, though storage classes and pricing vary slightly between the providers.

So ultimately the choice depends on the project you’re working on and on which service you are already accustomed to using.

Containers work in several environments – Dock it and good to go!

Containers can be used in many environments, from local machines to remote servers. Since containerisation is so widely used, you might need to containerise your application so that it runs in remote environments. We wrote a post about this a while ago as well, so have a look at it here: https://www.spatineo.com/how-aws-enables-automation-of-publishing-geospatial-data/

Geospatial data in the cloud

At some point, you might want to move some of your geospatial data into the cloud, since it’s ultimately the superior option in terms of both price and efficiency. Open-source technologies help with this.

You can use GeoServer to publish your data from the cloud. It’s free and open-source software, so it is very accessible and a great alternative to other options.

Your data is ingested by the backend of your choice. It can be a relational database management system such as MySQL or Postgres. It can also be a NoSQL database such as MongoDB Atlas.

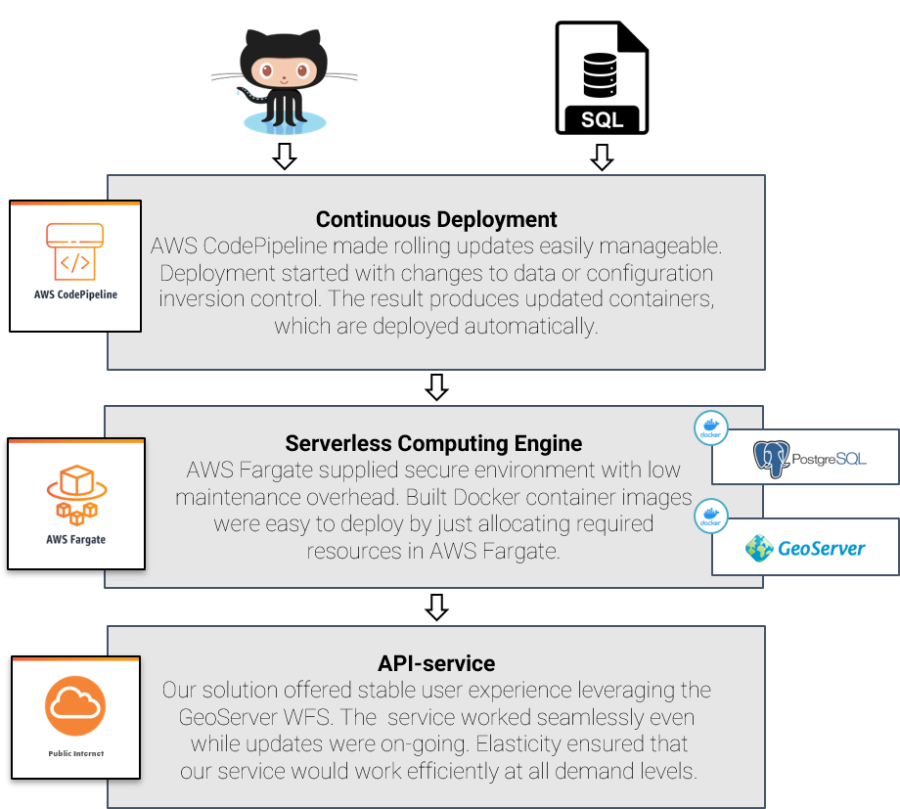

One method we have used, for example, is the following: wrap the GeoServer spatial data API server and the PostgreSQL/PostGIS database in their own Docker containers, and set up an automation pipeline to deploy them to the Amazon cloud.
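Once a GeoServer instance like this is running, client applications typically read the data through standard OGC interfaces such as WFS. The snippet below is a minimal sketch of how a consumer might build a WFS 2.0.0 GetFeature request URL. The host name, the `demo:roads` layer and the bounding box are hypothetical, and `wfs_getfeature_url` is a helper written for this example, not part of GeoServer.

```python
from typing import Optional, Sequence
from urllib.parse import urlencode


def wfs_getfeature_url(
    base_url: str,
    type_name: str,
    bbox: Optional[Sequence[float]] = None,
    count: int = 100,
) -> str:
    """Build a WFS 2.0.0 GetFeature URL that asks for GeoJSON output."""
    params = {
        "service": "WFS",
        "version": "2.0.0",
        "request": "GetFeature",
        "typeNames": type_name,
        "outputFormat": "application/json",
        "count": str(count),  # cap the response size to protect the server
    }
    if bbox is not None:
        # Four corner coordinates; the axis order depends on the layer's CRS.
        params["bbox"] = ",".join(str(value) for value in bbox)
    return f"{base_url}?{urlencode(params)}"


# Hypothetical endpoint and layer name, for illustration only.
url = wfs_getfeature_url(
    "https://example.org/geoserver/wfs",
    "demo:roads",
    bbox=(24.5, 60.1, 25.3, 60.4),
    count=50,
)
print(url)
```

Limiting `count` and passing a `bbox` keeps individual requests small, which also helps when many consumers share the same service.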

This is just one way we have built a well-polished spatial data pipeline, or “data flow”, to the cloud, and we are currently building many different variations of it for our customers. If you are interested in getting your data to flow efficiently, feel free to send us a message!

Want to get your data flowing with ease? Get in touch with us now!
